Istio 1.0 Study Notes (8): Deploying Istio on a Kubernetes Cluster with Helm

📅 2019-01-16

1. Environment

This post walks through installing Istio 1.0.5 with Helm on Kubernetes 1.13. The environment is as follows:

```
helm version
Client: &version.Version{SemVer:"v2.12.2", GitCommit:"7d2b0c73d734f6586ed222a567c5d103fed435be", GitTreeState:"clean"}
Server: &version.Version{SemVer:"v2.12.2", GitCommit:"7d2b0c73d734f6586ed222a567c5d103fed435be", GitTreeState:"clean"}

kubectl version
Client Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"ddf47ac13c1a9483ea035a79cd7c10005ff21a6d", GitTreeState:"clean", BuildDate:"2018-12-03T21:04:45Z", GoVersion:"go1.11.2", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:28:14Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/amd64"}
```

Download and extract the Istio release package:

```
wget https://github.com/istio/istio/releases/download/1.0.5/istio-1.0.5-linux.tar.gz
tar -zxvf istio-1.0.5-linux.tar.gz
cd istio-1.0.5
```

The extracted directory layout is as follows:

```
istio-1.0.5
├── bin
│   └── istioctl
├── install
│   ├── consul
│   ├── gcp
│   ├── kubernetes
│   ├── README.md
│   └── tools
├── istio.VERSION
├── LICENSE
├── README.md
├── samples
│   ├── bookinfo
│   ├── certs
│   ├── CONFIG-MIGRATION.md
│   ├── health-check
│   ├── helloworld
│   ├── httpbin
│   ├── https
│   ├── kubernetes-blog
│   ├── rawvm
│   ├── README.md
│   ├── sleep
│   └── websockets
└── tools
    ├── adsload
    ├── cache_buster.yaml
    ├── convert_perf_results.py
    ├── deb
    ├── dump_kubernetes.sh
    ├── githubContrib
    ├── hyperistio
    ├── istio-docker.mk
    ├── license
    ├── perf_istio_rules.yaml
    ├── perf_k8svcs.yaml
    ├── perf_setup.svg
    ├── README.md
    ├── rules.yml
    ├── run_canonical_perf_tests.sh
    ├── setup_perf_cluster.sh
    ├── setup_run
    ├── update_all
    └── vagrant
```

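Of these:

- `istio-1.0.5/install/kubernetes/helm` contains the charts needed to install Istio with Helm.
- `istioctl` in the `bin` directory is the Istio client. It is used to inject Envoy manually as a sidecar proxy and to manage routing rules and policies.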

Add istioctl to the PATH; here we simply copy it to /usr/local/bin and check its version:

```
cp bin/istioctl /usr/local/bin/
istioctl version
Version: 1.0.5
GitRevision: c1707e45e71c75d74bf3a5dec8c7086f32f32fad
User: root@6f6ea1061f2b
Hub: docker.io/istio
GolangVersion: go1.10.4
BuildStatus: Clean
```

2. Installing Istio with Helm

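Because the Helm used here is version 2.12.2, i.e. 2.10 or later, Istio's CRDs no longer have to be installed manually with kubectl beforehand; the chart installs them.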
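The Helm version check can be scripted. This is a sketch, not part of the Istio tooling: `helm_semver` and `version_ge` are hypothetical helper names, the parser assumes the `SemVer:"v…"` field format shown in the `helm version` output above, and the comparison assumes GNU `sort -V`. The CRD manifest path in the usage comment is the one the Istio 1.0 Helm instructions use for Helm older than 2.10; verify it against your release.

```shell
# helm_semver: read `helm version` output on stdin and print each SemVer
# without its leading "v", e.g.
#   Client: &version.Version{SemVer:"v2.12.2", ...}  ->  2.12.2
helm_semver() {
  sed -n 's/.*SemVer:"v\([0-9][0-9.]*\)".*/\1/p'
}

# version_ge A B: exit 0 if version A >= version B.
# GNU `sort -V` orders the two versions; B sorts first (or ties) iff A >= B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage (needs helm; the fallback needs kubectl; CRD path assumed, verify it):
#   v=$(helm version --client | helm_semver)
#   version_ge "$v" 2.10.0 || kubectl apply -f install/kubernetes/helm/istio/templates/crds.yaml
```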

The Istio chart is located in `istio-1.0.5/install/kubernetes/helm/istio` and contains the following components:

- ingress
- ingressgateway
- egressgateway
- sidecarInjectorWebhook
- galley
- mixer
- pilot
- security (Citadel)
- grafana
- prometheus
- servicegraph
- tracing (Jaeger)
- kiali

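Each component is enabled or disabled through its `enabled` flag in the values file. Below we create a values file named istio.yaml that also enables several components that are disabled by default: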

```yaml
tracing:
  enabled: true
servicegraph:
  enabled: true
kiali:
  enabled: true
grafana:
  enabled: true
```
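Helm 2 can also take the same settings on the command line through `--set`, which accepts comma-separated `key=value` pairs. A minimal sketch that builds that argument from component names; `enable_flags` is a hypothetical helper, and the values file remains the easier option to keep under version control.

```shell
# enable_flags COMPONENT...: print a comma-separated Helm 2 --set value that
# turns on each component's `enabled` flag, mirroring the istio.yaml file.
enable_flags() {
  local flags=""
  for c in "$@"; do
    # ${flags:+$flags,} adds the previous pairs plus a comma only when non-empty
    flags="${flags:+$flags,}$c.enabled=true"
  done
  printf '%s\n' "$flags"
}

# Usage:
#   helm install install/kubernetes/helm/istio --name istio --namespace istio-system \
#     --set "$(enable_flags tracing servicegraph kiali grafana)"
```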

Install Istio directly with Helm:

```
helm install install/kubernetes/helm/istio --name istio --namespace istio-system -f istio.yaml
```

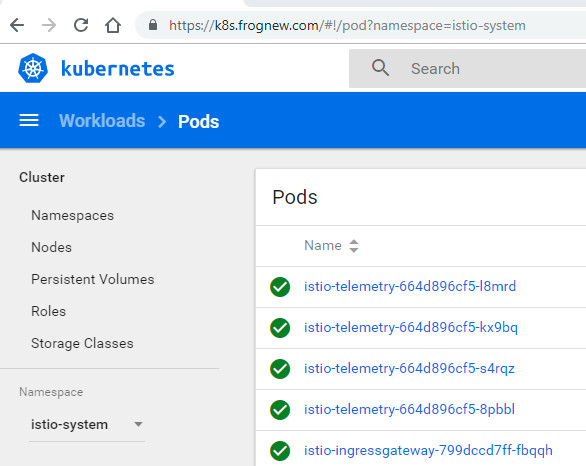

After the installation finishes, confirm that the Pods of all components are running:

```
kubectl get pod -n istio-system
NAME                                      READY   STATUS    RESTARTS   AGE
grafana-59b8896965-lngmf                  1/1     Running   0          43m
istio-citadel-856f994c58-q7km9            1/1     Running   0          43m
istio-egressgateway-5649fcf57-m52zh       1/1     Running   0          43m
istio-galley-7665f65c9c-2xh59             1/1     Running   0          43m
istio-ingressgateway-6755b9bbf6-x5gzb     1/1     Running   0          43m
istio-pilot-56855d999b-rh7w7              2/2     Running   0          43m
istio-policy-6fcb6d655f-7dfd5             2/2     Running   0          43m
istio-sidecar-injector-768c79f7bf-7887s   1/1     Running   0          43m
istio-telemetry-664d896cf5-wnfg4          2/2     Running   0          43m
istio-tracing-6b994895fd-m9j2s            1/1     Running   0          43m
kiali-67c69889b5-2lvq5                    1/1     Running   0          43m
prometheus-76b7745b64-5z2cw               1/1     Running   0          43m
servicegraph-5c4485945b-gcrj8             1/1     Running   0          43m
```
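Instead of reading the READY column by eye, the check can be automated. A sketch with a hypothetical `all_pods_ready` helper; it assumes the default `kubectl get pod` column layout (NAME, READY, STATUS, ...) and treats Pods in `Completed` state, such as finished Helm hook Jobs, as acceptable.

```shell
# all_pods_ready: read `kubectl get pod` output on stdin (header line first)
# and exit 0 only if every Pod is Running with all containers ready (READY
# shows n/n). Completed Pods are skipped; offending Pod names go to stderr.
all_pods_ready() {
  awk 'BEGIN { bad = 0 }
       NR > 1 && $3 != "Completed" {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) {
           print $1 > "/dev/stderr"
           bad = 1
         }
       }
       END { exit bad }'
}

# Usage: wait until the istio-system Pods are up.
#   until kubectl get pod -n istio-system | all_pods_ready 2>/dev/null; do sleep 5; done
```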

3. Exposing the add-on components through the Istio Gateway

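After the installation, besides the core data-plane and control-plane components of the Istio architecture (see Istio 1.0 Study Notes (5): Basic Concepts of Istio), add-on components such as Prometheus, Grafana, Jaeger and Kiali have also been deployed. These are already familiar from the cloud-native ecosystem.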

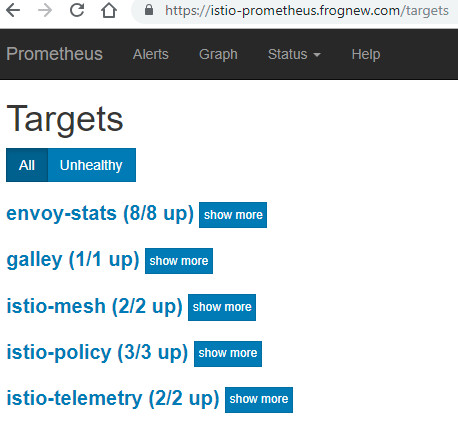

- Prometheus: the monitoring system that collects Istio's metrics

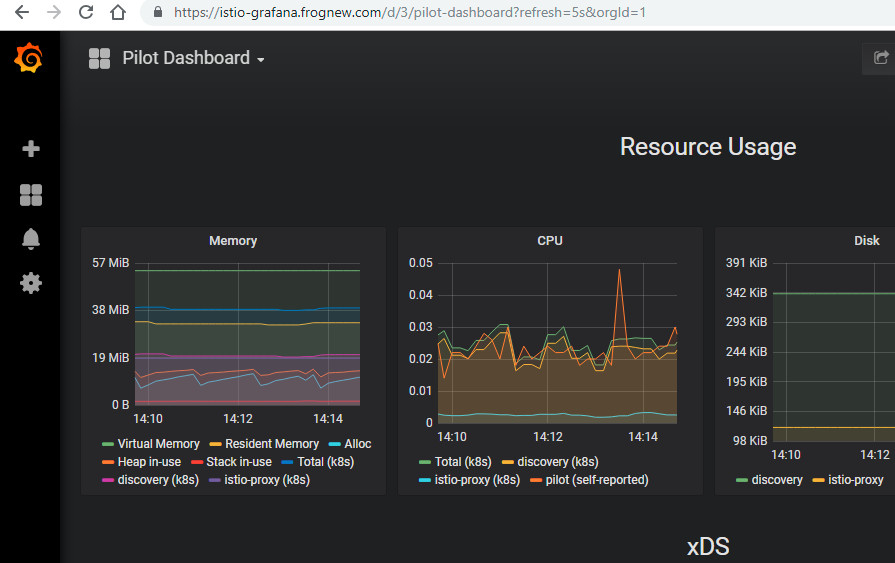

- Grafana: charts the monitoring data; the Grafana deployed by Istio ships with built-in dashboards for each component

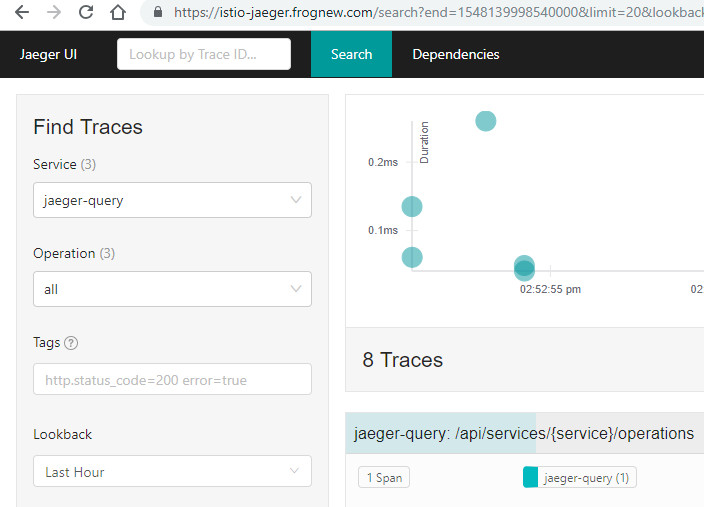

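- Jaeger: a distributed tracing system; integrated with Istio, it provides call-chain tracing and dependency analysis for Istio-based microservices, supporting performance management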

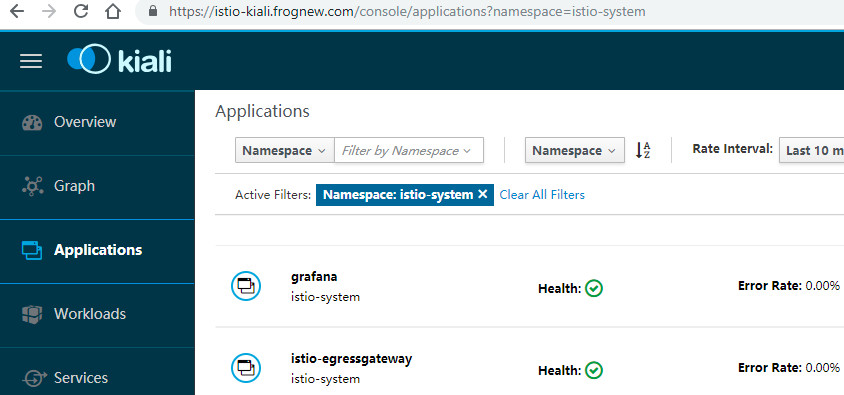

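- Kiali: an observability tool for Istio that can be regarded as Istio's UI; it shows the service network topology, fault-tolerance behavior (timeouts, retries, circuit breaking, etc.) and distributed traces (via Jaeger Tracing)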

Each of these add-ons has its own web UI. Here we expose them outside the cluster through the Istio Gateway so they can be accessed externally.

3.1 Preparation: configuring istio-ingressgateway

In Kubernetes, an Ingress resource exposes in-cluster Services outside the cluster, whereas Istio, as a service mesh, recommends a better configuration model: the Istio Gateway.

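First, make sure the Istio Gateway itself is reachable from outside the cluster. As with exposing an Ingress Controller in Kubernetes, there are several options, such as NodePort, LoadBalancer, or running it with hostNetwork: true.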

Here we run the Istio Gateway container with hostNetwork: true. The istio-ingressgateway Service created by the Istio 1.0.5 Helm chart is of type LoadBalancer and opens many NodePorts, and the chart offers no hostNetwork option, so we reconfigure the Istio Gateway Deployment and Service with kubectl edit.

Edit the istio-ingressgateway Service: change its type to ClusterIP and delete every nodePort:

```
kubectl edit svc istio-ingressgateway -n istio-system

......
spec:
  type: ClusterIP
  ......
  ports:
  - name: http2
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
  - name: tcp
    port: 31400
    protocol: TCP
    targetPort: 31400
  - name: tcp-pilot-grpc-tls
    port: 15011
    protocol: TCP
    targetPort: 15011
  - name: tcp-citadel-grpc-tls
    port: 8060
    protocol: TCP
    targetPort: 8060
  - name: tcp-dns-tls
    port: 853
    protocol: TCP
    targetPort: 853
  - name: http2-prometheus
    port: 15030
    protocol: TCP
    targetPort: 15030
  - name: http2-grafana
    port: 15031
    protocol: TCP
    targetPort: 15031
......
```
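`kubectl edit` is interactive; the same Service change can be applied non-interactively with a JSON Patch through `kubectl patch --type=json`. A sketch with a hypothetical `clusterip_patch` helper, assuming every port currently carries a `nodePort` (true for a LoadBalancer Service), so that each `remove` has a target. If the Service also sets fields that are only valid for NodePort/LoadBalancer Services, such as `externalTrafficPolicy`, those would need removing as well.

```shell
# clusterip_patch N: print a JSON Patch (RFC 6902) that switches a Service to
# type ClusterIP and removes the nodePort of ports 0 .. N-1, since a
# ClusterIP Service may not carry nodePort values.
clusterip_patch() {
  local n="$1" i=0
  printf '%s' '[{"op":"replace","path":"/spec/type","value":"ClusterIP"}'
  while [ "$i" -lt "$n" ]; do
    printf ',{"op":"remove","path":"/spec/ports/%d/nodePort"}' "$i"
    i=$((i + 1))
  done
  printf ']\n'
}

# Usage: count the Service's ports, then patch it.
#   n=$(kubectl get svc istio-ingressgateway -n istio-system -o jsonpath='{.spec.ports[*].name}' | wc -w)
#   kubectl patch svc istio-ingressgateway -n istio-system --type=json -p "$(clusterip_patch "$n")"
```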

Edit the istio-ingressgateway Deployment: under spec.template.spec set hostNetwork: true and dnsPolicy: ClusterFirstWithHostNet, and add nodeAffinity and podAntiAffinity rules so that the istio-ingressgateway Pods are scheduled onto the cluster's edge nodes, at most one per node.

```
kubectl edit deploy istio-ingressgateway -n istio-system

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
......
spec:
  replicas: 2
  ......
  template:
    spec:
      ......
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node-role.kubernetes.io/edge
                operator: Exists
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - istio-ingressgateway
              - key: istio
                operator: In
                values:
                - ingressgateway
            topologyKey: kubernetes.io/hostname
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
```
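The nodeAffinity rule above only matches nodes that carry the `node-role.kubernetes.io/edge` label. A sketch of labelling the chosen edge nodes; `label_edge_nodes` and the node names in the usage comment are hypothetical. An empty label value is enough because the rule uses `operator: Exists`, and the master toleration allows a master node to double as an edge node.

```shell
# label_edge_nodes NODE...: add the empty-valued label
# node-role.kubernetes.io/edge to each node, which is what the Deployment's
# nodeAffinity rule (operator: Exists) selects on. Stops at the first failure.
label_edge_nodes() {
  for node in "$@"; do
    kubectl label node "$node" node-role.kubernetes.io/edge= --overwrite || return 1
  done
}

# Usage (node names are placeholders):
#   label_edge_nodes edge-node-1 edge-node-2
#   kubectl get nodes -l node-role.kubernetes.io/edge
```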

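From outside the cluster, the Istio Gateway's IP address is now simply the IP address of an edge node. With several edge nodes, Keepalived can let them contend for a single VIP to achieve high availability. This works exactly like edge-node high availability for Kubernetes Ingress and is not repeated here; see "High Availability of Kubernetes Ingress Edge Nodes on Bare Metal, with the Ingress Controller Using hostNetwork".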

Note that the Helm chart also creates an HPA for istio-ingressgateway. Since we use hostNetwork and pin istio-ingressgateway to all edge nodes in the cluster, this HPA must be deleted manually:

```
kubectl delete HorizontalPodAutoscaler istio-ingressgateway -n istio-system
```
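With the HPA gone, the replica count comes from the Deployment alone (2 in the edit above). Because the required podAntiAffinity allows at most one gateway Pod per node, extra replicas would stay Pending, so one option is to keep the replica count equal to the number of labelled edge nodes. A sketch with a hypothetical `scale_gateway_to_edge_nodes` helper:

```shell
# scale_gateway_to_edge_nodes: count the nodes labelled
# node-role.kubernetes.io/edge and scale the istio-ingressgateway Deployment
# to that many replicas (one gateway Pod per edge node).
scale_gateway_to_edge_nodes() {
  local n
  n=$(kubectl get nodes -l node-role.kubernetes.io/edge -o name | wc -l | tr -d ' ')
  if [ "$n" -eq 0 ]; then
    echo "no nodes labelled node-role.kubernetes.io/edge" >&2
    return 1
  fi
  kubectl scale deployment istio-ingressgateway -n istio-system --replicas="$n"
}
```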

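Next, store the SSL certificate as a secret in the istio-system namespace. The secret must be named istio-ingressgateway-certs, because that is the secret the istio-ingressgateway Pods mount at /etc/istio/ingressgateway-certs, the path the Gateway's serverCertificate and privateKey refer to: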

```
kubectl create secret tls istio-ingressgateway-certs --cert=fullchain.pem --key=privkey.pem -n istio-system
```
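A single `istio-ingressgateway-certs` secret serves every hostname configured on the Gateways in this post, so the certificate has to cover all of them. The sketch below checks the Subject Alternative Names printed by `openssl x509 -noout -text`; `cert_covers` is a hypothetical helper, and it assumes the usual one-label wildcard rule (`*.frognew.com` matches `istio-grafana.frognew.com` but not `a.b.frognew.com`).

```shell
# cert_covers HOST...: read `openssl x509 -noout -text` output on stdin and
# exit 0 only if every HOST is listed as a DNS Subject Alternative Name,
# either exactly or through a single-label wildcard (*.parent.domain).
cert_covers() {
  local names h wildcard
  names=$(grep -o 'DNS:[^,[:space:]]*' | sed 's/^DNS://')
  for h in "$@"; do
    wildcard="*.${h#*.}"   # istio-grafana.frognew.com -> *.frognew.com
    printf '%s\n' "$names" | grep -qxF -e "$h" -e "$wildcard" || {
      echo "not covered: $h" >&2
      return 1
    }
  done
}

# Usage:
#   openssl x509 -noout -text -in fullchain.pem | cert_covers \
#     istio-prometheus.frognew.com istio-grafana.frognew.com \
#     istio-jaeger.frognew.com istio-kiali.frognew.com k8s.frognew.com
```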

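3.2 Creating the Gateway for the components

The Gateway below binds to the istio-ingressgateway Pods through the `istio: ingressgateway` selector and serves the four add-on hostnames: on port 80 it only redirects to HTTPS (`httpsRedirect: true`), and on port 443 it terminates TLS with the certificate and key mounted from the istio-ingressgateway-certs secret.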

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: frognew-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    tls:
      httpsRedirect: true
    hosts:
    - istio-prometheus.frognew.com
    - istio-grafana.frognew.com
    - istio-jaeger.frognew.com
    - istio-kiali.frognew.com
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      serverCertificate: /etc/istio/ingressgateway-certs/tls.crt
      privateKey: /etc/istio/ingressgateway-certs/tls.key
    hosts:
    - istio-prometheus.frognew.com
    - istio-grafana.frognew.com
    - istio-jaeger.frognew.com
    - istio-kiali.frognew.com
```

3.3 Exposing Prometheus through the Istio Gateway

Create the VirtualService for Prometheus:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: prometheus
  namespace: istio-system
spec:
  hosts:
  - istio-prometheus.frognew.com
  gateways:
  - frognew-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: 9090
        host: prometheus
```

3.4 Exposing Grafana through the Istio Gateway

Create the VirtualService for Grafana:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: grafana
  namespace: istio-system
spec:
  hosts:
  - istio-grafana.frognew.com
  gateways:
  - frognew-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: 3000
        host: grafana
```

3.5 Exposing Jaeger through the Istio Gateway

Create the VirtualService for Jaeger:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: jaeger-query
  namespace: istio-system
spec:
  hosts:
  - istio-jaeger.frognew.com
  gateways:
  - frognew-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: 16686
        host: jaeger-query
```

3.6 Exposing Kiali through the Istio Gateway

Create the VirtualService for Kiali:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: kiali
  namespace: istio-system
spec:
  hosts:
  - istio-kiali.frognew.com
  gateways:
  - frognew-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: 20001
        host: kiali
```

Kiali's default username and password are admin/admin.
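The four VirtualServices above differ only in name, hostname, target Service and port. A sketch that renders them from one template so they can be applied in a single `kubectl apply`; `virtual_service` is a hypothetical helper, and its output follows the manifests above (namespace istio-system, gateway frognew-gateway, prefix `/`).

```shell
# virtual_service NAME HOST SERVICE PORT: print an Istio VirtualService that
# routes every path of HOST, arriving through frognew-gateway, to SERVICE:PORT
# in the istio-system namespace.
virtual_service() {
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: $1
  namespace: istio-system
spec:
  hosts:
  - $2
  gateways:
  - frognew-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: $4
        host: $3
EOF
}

# Usage: render all four add-on routes as one multi-document stream.
#   {
#     virtual_service prometheus   istio-prometheus.frognew.com prometheus   9090;  echo ---
#     virtual_service grafana      istio-grafana.frognew.com    grafana      3000;  echo ---
#     virtual_service jaeger-query istio-jaeger.frognew.com     jaeger-query 16686; echo ---
#     virtual_service kiali        istio-kiali.frognew.com      kiali        20001
#   } | kubectl apply -f -
```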

3.7 Exposing the Kubernetes Dashboard through the Istio Gateway

Since we no longer use Ingress Nginx in Kubernetes and use the Istio Gateway instead, the Kubernetes Dashboard also has to be exposed through the Istio Gateway.

Because the Kubernetes Dashboard is deployed in the kube-system namespace, create a Gateway resource in kube-system:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: frognew-gateway
  namespace: kube-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    tls:
      httpsRedirect: true
    hosts:
    - k8s.frognew.com
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      serverCertificate: /etc/istio/ingressgateway-certs/tls.crt
      privateKey: /etc/istio/ingressgateway-certs/tls.key
    hosts:
    - k8s.frognew.com
```

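The Gateway already terminates TLS for k8s.frognew.com, so for simplicity the Dashboard deployed in the cluster no longer serves TLS itself. For a Dashboard deployed with its Helm chart, the following custom values file does this: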

```yaml
enableInsecureLogin: True
image:
  repository: k8s.gcr.io/kubernetes-dashboard-amd64
  tag: v1.10.1
rbac:
  clusterAdminRole: true
```

Create the VirtualService for the Kubernetes Dashboard:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: k8s-dashboard
  namespace: kube-system
spec:
  hosts:
  - k8s.frognew.com
  gateways:
  - frognew-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: 443
        host: kubernetes-dashboard
```
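Once the Gateways and VirtualServices are applied, each hostname can be smoke-tested before DNS points at the edge nodes. A sketch with a hypothetical `check_host` helper; it uses curl's `--resolve` option to send requests for a hostname straight to a given IP, and `192.0.2.10` in the usage comment is a documentation placeholder for an edge node IP or the Keepalived VIP. Port 80 should answer with a redirect (301) because of `httpsRedirect: true`; on port 443 the code depends on the application (a login redirect is fine), while a 404 usually means no VirtualService matched the host.

```shell
# check_host EDGE_IP HOST: send one HTTP and one HTTPS request for HOST
# directly to EDGE_IP (curl --resolve bypasses DNS but keeps the Host header
# and TLS SNI) and print both status codes; curl reports 000 on failure.
check_host() {
  local edge_ip="$1" host="$2" http https
  http=$(curl -s -o /dev/null -w '%{http_code}' --resolve "$host:80:$edge_ip" "http://$host/" || true)
  https=$(curl -s -o /dev/null -w '%{http_code}' --resolve "$host:443:$edge_ip" "https://$host/" || true)
  printf '%s http=%s https=%s\n' "$host" "$http" "$https"
}

# Usage (placeholder IP):
#   for h in istio-prometheus istio-grafana istio-jaeger istio-kiali k8s; do
#     check_host 192.0.2.10 "$h.frognew.com"
#   done
```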

4. Summary

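This post walked through deploying Istio 1.0.5 on Kubernetes with the official Istio Helm chart, and used the Istio Gateway to expose the bundled add-ons Prometheus, Grafana, Jaeger and Kiali outside the cluster. The Istio Gateway controls how edge services are exposed outside the cluster (mesh), and together with VirtualServices it manages the traffic entering the cluster.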