Kubernetes Ingress in Practice (6): High Availability for Kubernetes Ingress Edge Nodes on Bare Metal, with the Ingress Controller Using hostNetwork

📅 2018-12-13

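- Kubernetes Ingress in Practice (1): Deploying the NGINX Ingress Controller in a Kubernetes Cluster

- Kubernetes Ingress in Practice (2): Using Ingress to Expose Your First HTTP Service Outside the Cluster

- Kubernetes Ingress in Practice (3): Using Ingress to Expose a gRPC Service Outside the Kubernetes Cluster

- Kubernetes Ingress in Practice (4): High Availability for Kubernetes Ingress Edge Nodes on Bare Metal

- Kubernetes Ingress in Practice (5): High Availability for Kubernetes Ingress Edge Nodes on Bare Metal (Based on IPVS)

- Kubernetes Ingress in Practice (6): High Availability for Kubernetes Ingress Edge Nodes on Bare Metal, with the Ingress Controller Using hostNetwork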

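Two earlier posts, Kubernetes Ingress in Practice (4): High Availability for Kubernetes Ingress Edge Nodes on Bare Metal and Kubernetes Ingress in Practice (5): High Availability for Kubernetes Ingress Edge Nodes on Bare Metal (Based on IPVS), described two ways to make Kubernetes Ingress edge nodes highly available on bare metal. Both expose the nginx-ingress-controller Service outside the cluster through externalIPs; in the former kube-proxy runs without IPVS, and in the latter kube-proxy has IPVS enabled.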

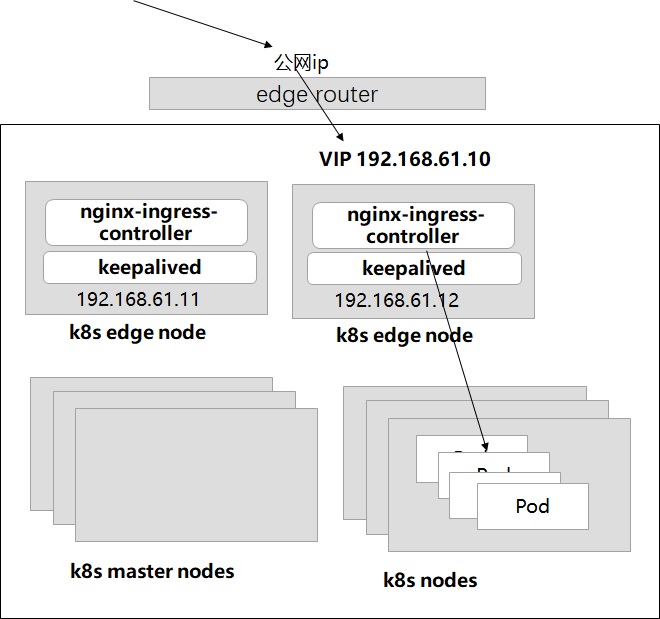

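Kubernetes edge nodes generally run on dedicated servers whose only job is to bring traffic from outside the cluster into it. Besides exposing the nginx-ingress-controller Service through externalIPs, you can also configure the Ingress Controller to use hostNetwork, so that it listens directly on ports 80 and 443 of the edge node host. As in Kubernetes Ingress in Practice (4), multiple edge nodes can be deployed in a hot-standby arrangement in which they compete for a single VIP; that is, Keepalived provides the high availability of the edge nodes. This is also the approach we currently use in production.

As the figure above shows, two edge nodes are deployed, 192.168.61.11 and 192.168.61.12. The edge router maps the public IP to the internal VIP 192.168.61.10.

Below is the values file, ingress-nginx.yaml, for deploying ingress-nginx with Helm: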

```yaml
controller:
  replicaCount: 2
  hostNetwork: true
#  service:
#    externalIPs:
#      - 192.168.61.10
  nodeSelector:
    node-role.kubernetes.io/edge: ''
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
              - key: app
                operator: In
                values:
                  - nginx-ingress
              - key: component
                operator: In
                values:
                  - controller
          topologyKey: kubernetes.io/hostname
  tolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule

defaultBackend:
  nodeSelector:
    node-role.kubernetes.io/edge: ''
  tolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule
```

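The nginx ingress controller has a replicaCount of 2, and the replicas are scheduled onto the two edge nodes, node1 and node2: the nodeSelector restricts them to nodes labeled node-role.kubernetes.io/edge, and the required podAntiAffinity keeps the two replicas on different hosts. No externalIPs are specified for the nginx ingress controller Service here; instead, hostNetwork: true makes the nginx ingress controller use the host network.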
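The nodeSelector in the values file only matches nodes that carry the node-role.kubernetes.io/edge label (with an empty value), so the edge nodes need that label before the chart is installed. The following is a minimal sketch, assuming the edge nodes are registered in the cluster as node1 and node2; the `label_edge_nodes` helper and the `KUBECTL` variable are illustrative names, not part of the original setup.

```shell
# KUBECTL can be overridden (for example with "echo") for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: add the empty-valued edge role label to each given node.
label_edge_nodes() {
  for node in "$@"; do
    # A trailing "=" sets the label to an empty string, matching the ''
    # value in the nodeSelector; --overwrite makes the call repeatable.
    "$KUBECTL" label node "$node" node-role.kubernetes.io/edge= --overwrite
  done
}
```

Calling `label_edge_nodes node1 node2` would label both nodes; `kubectl get nodes -l node-role.kubernetes.io/edge` should then list them.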

```bash
helm install stable/nginx-ingress \
  -n nginx-ingress \
  --namespace ingress-nginx \
  -f ingress-nginx.yaml
```

Once the deployment finishes, the nginx ingress controller listens directly on ports 80 and 443 of the host. The ingress controller can be reached through the IP of either edge node:

```bash
curl 192.168.61.11
default backend - 404

curl 192.168.61.12
default backend - 404
```

Install keepalived on every edge node. The keepalived.conf on each edge node is as follows:

```
! Configuration File for keepalived

global_defs {
    router_id K8S_INGRESS_DEVEL
    script_user root
    enable_script_security
}

# Health check: succeeds while something accepts TCP connections on local port 443
vrrp_script chk_ingress_controller {
    script "</dev/tcp/127.0.0.1/443"
    interval 5
    weight -2
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_ingress_controller
    }
    virtual_ipaddress {
        192.168.61.10/24 dev eth0 label eth0:0
    }
}

virtual_server 192.168.61.10 443 {
    delay_loop 6
    # IPVS scheduler: round robin
    lb_algo rr
    lb_kind DR
    nat_mask 255.255.255.0
    persistence_timeout 50
    protocol TCP

    real_server 192.168.61.11 443 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
        }
    }
    real_server 192.168.61.12 443 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
        }
    }
}
```
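The health check in vrrp_script uses `</dev/tcp/127.0.0.1/443`, which under bash attempts to open a TCP connection to local port 443 and fails when nothing is listening. Because weight is negative, a failing check lowers the node's VRRP priority by 2 (from 100 to 98 with the values above), which lets a healthy peer that still advertises 100 take over the VIP. The sketch below illustrates both ideas; `check_tcp` and `effective_priority` are hypothetical helper names for illustration and are not something keepalived provides.

```shell
# Returns 0 if a TCP connection to host:port can be opened, non-zero otherwise.
# /dev/tcp/HOST/PORT is a bash feature, not a real device file.
check_tcp() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# Prints the priority a node would advertise, given its base priority,
# the vrrp_script weight, and the exit status of the check script.
effective_priority() {
  base="$1"; weight="$2"; status="$3"
  if [ "$status" -ne 0 ] && [ "$weight" -lt 0 ]; then
    # Negative weight: subtract from the priority while the check fails.
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}
```

For example, `check_tcp 127.0.0.1 443; effective_priority 100 -2 $?` prints 100 while the ingress controller is listening on port 443 and 98 once it stops.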

After keepalived has started on every edge node, running the following command on one of the edge nodes shows that the node has acquired the VIP:

```bash
ip addr sh eth0
3: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 08:00:27:da:9f:97 brd ff:ff:ff:ff:ff:ff
    inet 192.168.61.11/24 brd 192.168.61.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.61.10/24 scope global secondary eth0:0
       valid_lft forever preferred_lft forever
    inet6 fe80::1f1d:9638:d8f5:c2a5/64 scope link
       valid_lft forever preferred_lft forever
    inet6 fe80::3a95:48aa:a404:4275/64 scope link tentative dadfailed
       valid_lft forever preferred_lft forever
```

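Next, simulate a failure of the edge node that holds the VIP by shutting that node down. Then check the remaining edge nodes to confirm that the VIP has floated to one of them.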
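The failover check can be scripted so that it is easy to repeat on each remaining edge node. This is a minimal sketch, assuming the VIP 192.168.61.10 and the interface eth0 used in this post; `has_vip` is a hypothetical helper that only inspects the text produced by `ip addr`.

```shell
# Reads `ip addr` output on stdin and succeeds if the given IPv4 address
# is configured. The fixed-string match on "inet <addr>/" avoids matching
# a longer address that merely starts with the same digits.
has_vip() {
  grep -qF "inet $1/"
}
```

On an edge node, `ip addr show eth0 | has_vip 192.168.61.10 && echo "VIP is on this node"` prints the message only on the node that currently holds the VIP.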