Kubernetes 1.6 高可用集群部署

📅 2017-04-25 | 🖱️

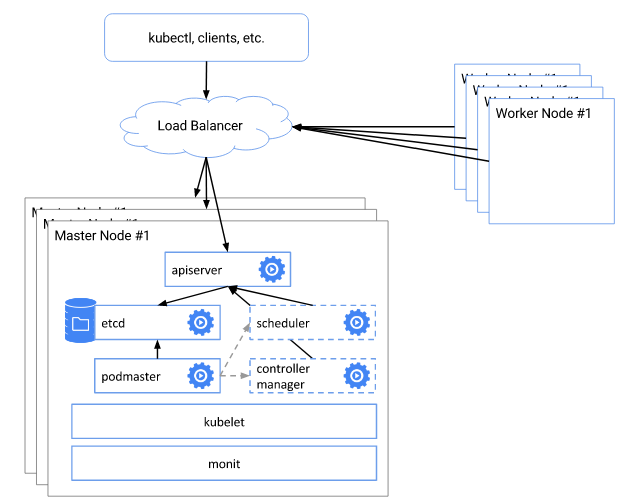

我们已经可以很方便的使用kubeadm快速初始化Kubernetes集群,但kubeadm当前还不能用于生产环境,同时kubeadm初始化的集群的Master节点不是高可用的,后端存储etcd也是单点。因此,本文将基于Kubernetes二进制包手动部署一个高可用的Kubernetes 1.6集群,将启用ApiServer的TLS双向认证和RBAC授权等安全机制。 通过这个手动部署的过程,我们还可以更加深入理解Kubernetes各组件的交互和运行原理。

1. 环境准备 #

1.1 系统环境 #

操作系统CentOS 7.3

1192.168.61.11 node1

2192.168.61.12 node2

3192.168.61.13 node3

1.2 Downloading the Packages

Download and unpack the Kubernetes server binary package:

wget https://dl.k8s.io/v1.6.2/kubernetes-server-linux-amd64.tar.gz
tar -zxvf kubernetes-server-linux-amd64.tar.gz

1.3 System Configuration

On every node, create /etc/sysctl.d/k8s.conf with the following content:

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1

Run sysctl -p /etc/sysctl.d/k8s.conf to apply the change.
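The same step can be scripted so that it is repeatable on every node. The following is a minimal sketch; the helper name write_k8s_sysctl is made up for illustration, and the modprobe hint assumes a kernel where bridge netfilter is a separate module.

```shell
# Sketch: write the bridge netfilter settings to a sysctl drop-in file.
# The default path matches the article; pass another path to try it safely.
write_k8s_sysctl() {
  conf=${1:-/etc/sysctl.d/k8s.conf}
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Typical use on a node (as root). Loading br_netfilter first is an assumption
# about the host: these keys only exist once bridge netfilter is available.
#   modprobe br_netfilter
#   write_k8s_sysctl && sysctl -p /etc/sysctl.d/k8s.conf
```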

Disable SELinux:

setenforce 0
vi /etc/selinux/config
# in the editor, set:
SELINUX=disabled
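When many nodes need the same change, editing the file non-interactively may be more convenient than vi. A small sketch, assuming GNU sed (as shipped with CentOS); disable_selinux_in is a hypothetical helper name.

```shell
# Sketch: set SELINUX=disabled in an SELinux config file without an editor.
# Only the SELINUX= line is rewritten; SELINUXTYPE= and comments are left alone.
disable_selinux_in() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# On a node (as root):
#   setenforce 0
#   disable_selinux_in /etc/selinux/config
```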

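1.4 Deploying the Highly Available etcd Cluster

Kubernetes uses etcd as the persistent store behind the API server, so a highly available etcd cluster is required, and for security the cluster should have TLS communication and authentication enabled. The etcd cluster is deployed on the three nodes node1 to node3. The details are not repeated here; see the earlier article "Building a Highly Available etcd 3.1 Cluster".

The resulting etcd cluster is:

node1 https://192.168.61.11:2379
node2 https://192.168.61.12:2379
node3 https://192.168.61.13:2379

1.5 Installing Docker on Every Node

Install Docker on every server in the cluster. According to the documentation at the time of writing, Kubernetes 1.6 has not yet been tested and validated against Docker 1.13 or the latest Docker 17.03, so the officially recommended Docker 1.12 is installed here. Installing Docker is straightforward and is not covered here; see the "Installing Docker 1.12" section of "Installing Kubernetes 1.6 with kubeadm".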

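2. TLS Certificates and Keys for the Kubernetes Components

We will disable the HTTP port of kube-apiserver and enable TLS communication with mutual authentication between the cluster components. The certificates and private keys for each component are generated below.

The keys and certificates are generated with cfssl, which was already used in "Building a Highly Available etcd 3.1 Cluster" and is used directly here.

2.1 CA Certificate and Private Key

Reuse the ca-config.json from "Building a Highly Available etcd 3.1 Cluster":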

{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "frognew": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}

Create the CA certificate signing request configuration, ca-csr.json:

{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "cloudnative"
    }
  ]
}

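- CN is the Common Name. kube-apiserver extracts this field from a client certificate and uses it as the user name of the request.
- O is the Organization. kube-apiserver extracts this field from a client certificate and uses it as the group the requesting user belongs to.

Now generate the CA certificate and private key with cfssl:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

ls
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem

Keep ca-key.pem and ca.pem somewhere safe; they are needed later.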

2.2 Creating the kube-apiserver Certificate and Private Key

Create the kube-apiserver certificate signing request configuration, apiserver-csr.json:

{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.61.10",
    "192.168.61.11",
    "192.168.61.12",
    "192.168.61.13",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "cloudnative"
    }
  ]
}

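Note that the hosts field lists the IPs and domain names authorized to use this certificate. Because the certificate will be used by every node of the Kubernetes Master cluster, the IP address of each node is listed.

In addition, to make kube-apiserver highly available we will place a highly available load balancer in front of it, and several core Kubernetes components will talk to the API server through that load balancer. The load balancer's IP address, here 192.168.61.10, must therefore also appear in the hosts field.

The hosts field also lists the in-cluster domain names of kube-apiserver and the IP address 10.96.0.1, which is the first IP of the range given later by the kube-apiserver flag --service-cluster-ip-range=10.96.0.0/12.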
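If a different service range is chosen, the address to put in the hosts field changes with it. The following sketch computes "network address plus one" for an IPv4 CIDR; first_service_ip is a hypothetical helper, not part of any Kubernetes tooling.

```shell
# Sketch: print the first usable address of an IPv4 CIDR (network address + 1).
# The body runs in a subshell so IFS and the variables do not leak into the caller.
first_service_ip() (
  ip=${1%/*}
  prefix=${1#*/}
  IFS=.
  set -- $ip
  n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
  n=$(( ($n & $mask) + 1 ))
  echo "$(( ($n >> 24) & 255 )).$(( ($n >> 16) & 255 )).$(( ($n >> 8) & 255 )).$(( $n & 255 ))"
)

# first_service_ip 10.96.0.0/12   # prints 10.96.0.1
```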

Generate the kube-apiserver certificate and private key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=frognew apiserver-csr.json | cfssljson -bare apiserver
ls apiserver*
apiserver.csr  apiserver-csr.json  apiserver-key.pem  apiserver.pem

2.3 Creating the kubernetes-admin Client Certificate and Private Key

Create the signing request configuration for the admin certificate, admin-csr.json:

{
  "CN": "kubernetes-admin",
  "hosts": [
    "192.168.61.11",
    "192.168.61.12",
    "192.168.61.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "cloudnative"
    }
  ]
}

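kube-apiserver extracts CN as the client's user name, here kubernetes-admin, and O as the group the user belongs to, here system:masters.

kube-apiserver predefines a number of ClusterRoleBindings for RBAC. For example, the binding cluster-admin ties the group system:masters to the ClusterRole cluster-admin, which grants every permission on kube-apiserver, so the user kubernetes-admin acts as the cluster's super administrator.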
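Because the RBAC identity is fixed at signing time, it can help to check a CSR config before running cfssl. The sketch below is a hypothetical helper (csr_identity) that relies on the "CN": "..." and "O": "..." layout used by the JSON files in this article; it is plain text matching, not a JSON parser.

```shell
# Sketch: show which RBAC user and group a cfssl CSR config will produce.
# "CN": matches the top-level common name; "O": matches the organization
# (the "C": "CN" country entry and "OU": do not match these patterns).
csr_identity() {
  user=$(sed -n 's/.*"CN": *"\([^"]*\)".*/\1/p' "$1" | head -n 1)
  group=$(sed -n 's/.*"O": *"\([^"]*\)".*/\1/p' "$1" | head -n 1)
  echo "user=$user group=$group"
}

# csr_identity admin-csr.json   # prints user=kubernetes-admin group=system:masters
```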

For background on Kubernetes RBAC and API server authentication, see the two earlier articles "Kubernetes 1.6 New Features: RBAC Authorization" and "Kubernetes Cluster Security: API Server Authentication".

Generate the kubernetes-admin certificate and private key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=frognew admin-csr.json | cfssljson -bare admin

ls admin*
admin.csr  admin-csr.json  admin-key.pem  admin.pem

2.4 Creating the kube-controller-manager Client Certificate and Private Key

Next, create the certificate and private key that kube-controller-manager uses as a client of the API server. The signing request configuration, controller-manager-csr.json:

{
  "CN": "system:kube-controller-manager",
  "hosts": [
    "192.168.61.11",
    "192.168.61.12",
    "192.168.61.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:kube-controller-manager",
      "OU": "cloudnative"
    }
  ]
}

kube-apiserver extracts CN as the client's user name, here system:kube-controller-manager.

Among the ClusterRoleBindings that kube-apiserver predefines for RBAC, system:kube-controller-manager binds the user system:kube-controller-manager to the ClusterRole system:kube-controller-manager.

Generate the certificate and private key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=frognew controller-manager-csr.json | cfssljson -bare controller-manager

ls controller-manager*
controller-manager.csr  controller-manager-csr.json  controller-manager-key.pem  controller-manager.pem

2.5 Creating the kube-scheduler Client Certificate and Private Key

Next, create the certificate and private key that kube-scheduler uses as a client of the API server. The signing request configuration, scheduler-csr.json:

{
  "CN": "system:kube-scheduler",
  "hosts": [
    "192.168.61.11",
    "192.168.61.12",
    "192.168.61.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:kube-scheduler",
      "OU": "cloudnative"
    }
  ]
}

kube-apiserver extracts CN as the client's user name, here system:kube-scheduler.

Among the ClusterRoleBindings that kube-apiserver predefines for RBAC, system:kube-scheduler binds the user system:kube-scheduler to the ClusterRole system:kube-scheduler.

Generate the certificate and private key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=frognew scheduler-csr.json | cfssljson -bare scheduler

ls scheduler*
scheduler.csr  scheduler-csr.json  scheduler-key.pem  scheduler.pem

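3. Deploying the Kubernetes Master Cluster

The Master cluster consists of the three nodes node1, node2 and node3, each running the three core components kube-apiserver, kube-controller-manager and kube-scheduler. The three kube-apiserver instances serve requests at the same time, with a highly available load balancer in front of them acting as the kube-apiserver address. kube-controller-manager and kube-scheduler also run three instances each, but only one instance of each is active at any moment; that instance is chosen by leader election.

Copy the files generated earlier, ca.pem, ca-key.pem, apiserver-key.pem, apiserver.pem, admin.pem, admin-key.pem, controller-manager.pem, controller-manager-key.pem, scheduler-key.pem and scheduler.pem, to /etc/kubernetes/pki on every node (ca-key.pem is included because the unit files below reference /etc/kubernetes/pki/ca-key.pem):

mkdir -p /etc/kubernetes/pki
cp {ca.pem,ca-key.pem,apiserver-key.pem,apiserver.pem,admin.pem,admin-key.pem,controller-manager.pem,controller-manager-key.pem,scheduler-key.pem,scheduler.pem} /etc/kubernetes/pki

From kubernetes/server/bin in the unpacked Kubernetes binary package, copy kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, kube-proxy and kubelet to /usr/local/bin on every node:

cp {kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} /usr/local/bin/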

3.1 Deploying kube-apiserver

Create the systemd unit file for kube-apiserver, /usr/lib/systemd/system/kube-apiserver.service. Replace the value of INTERNAL_IP with the address of the node being configured:

mkdir -p /var/log/kubernetes
export INTERNAL_IP=192.168.61.11
cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF
[Unit]
Description=kube-apiserver
After=network.target
After=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/apiserver
ExecStart=/usr/local/bin/kube-apiserver \
  --logtostderr=true \
  --v=0 \
  --advertise-address=${INTERNAL_IP} \
  --bind-address=${INTERNAL_IP} \
  --secure-port=6443 \
  --insecure-port=0 \
  --allow-privileged=true \
  --etcd-servers=https://192.168.61.11:2379,https://192.168.61.12:2379,https://192.168.61.13:2379 \
  --etcd-cafile=/etc/etcd/ssl/ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --storage-backend=etcd3 \
  --service-cluster-ip-range=10.96.0.0/12 \
  --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
  --client-ca-file=/etc/kubernetes/pki/ca.pem \
  --service-account-key-file=/etc/kubernetes/pki/ca-key.pem \
  --experimental-bootstrap-token-auth=true \
  --apiserver-count=3 \
  --enable-swagger-ui=true \
  --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,ResourceQuota,DefaultTolerationSeconds \
  --authorization-mode=RBAC \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kubernetes/audit.log
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

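- --insecure-port=0 disables the insecure HTTP port.
- --secure-port sets the HTTPS port. kube-scheduler, kube-controller-manager, kubelet, kube-proxy, kubectl and the other components all talk to the API server over the secure port (in practice through the load balancer deployed in front of it).
- --authorization-mode=RBAC enables RBAC authorization on the secure port; requests that are not authorized are rejected. kube-scheduler, kube-controller-manager, kubelet, kube-proxy, kubectl and the other components each present the User and Group from their own certificate or kubeconfig to pass RBAC authorization.
- --admission-control configures the admission controllers, here NamespaceLifecycle, LimitRanger, ServiceAccount, PersistentVolumeLabel, DefaultStorageClass, ResourceQuota and DefaultTolerationSeconds.
- --service-cluster-ip-range sets the address range for Service cluster IPs. These are virtual IPs used by Kubernetes Services and are not routable from outside the cluster.

Start kube-apiserver:

systemctl daemon-reload
systemctl enable kube-apiserver
systemctl start kube-apiserver
systemctl status kube-apiserver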

3.2 Configuring kubectl to Access the API Server

kube-apiserver is now deployed, and the certificate and private key for kubernetes-admin were generated earlier. We use this user to access the API server:

kubectl --server=https://192.168.61.11:6443 \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  get componentstatuses
NAME                 STATUS      MESSAGE   ERROR
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused
controller-manager   Unhealthy   Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: getsockopt: connection refused
etcd-0               Healthy     {"health": "true"}
etcd-2               Healthy     {"health": "true"}
etcd-1               Healthy     {"health": "true"}

The kubectl command above prints the status of the core Kubernetes components. kube-scheduler and kube-controller-manager have not been deployed yet, so both are reported as unhealthy for now.

Passing the API server address and the client certificate on every kubectl invocation is tedious. Next we create a kubeconfig file, admin.conf, for kubernetes-admin:

cd /etc/kubernetes
export KUBE_APISERVER="https://192.168.61.11:6443"

# set-cluster
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=admin.conf

# set-credentials
kubectl config set-credentials kubernetes-admin \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  --kubeconfig=admin.conf

# set-context
kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=admin.conf

# set default context
kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=admin.conf
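The same four kubectl config steps are repeated for every component in the rest of this article, with only the user name, certificate paths and output file changing. They could be wrapped in a small function along these lines; write_kubeconfig is a hypothetical helper, while the kubectl subcommands and flags are the ones already used above.

```shell
# Sketch: generate a kubeconfig for one client identity.
# Usage: write_kubeconfig <user> <cert> <key> <output-file> <apiserver-url>
write_kubeconfig() {
  user=$1 cert=$2 key=$3 out=$4 server=$5
  ca=/etc/kubernetes/pki/ca.pem   # CA path used throughout the article

  kubectl config set-cluster kubernetes \
    --certificate-authority="$ca" \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$out" &&
  kubectl config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$out" &&
  kubectl config set-context "$user@kubernetes" \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" &&
  kubectl config use-context "$user@kubernetes" --kubeconfig="$out"
}

# Example, equivalent to the admin.conf steps:
#   write_kubeconfig kubernetes-admin /etc/kubernetes/pki/admin.pem \
#     /etc/kubernetes/pki/admin-key.pem admin.conf https://192.168.61.11:6443
```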

So that kubectl picks up admin.conf by default, copy it to $HOME/.kube and rename it to config:

mkdir -p ~/.kube
cp /etc/kubernetes/admin.conf ~/.kube/config

Now try accessing the API server with plain kubectl:

kubectl get cs
NAME                 STATUS      MESSAGE   ERROR
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused
controller-manager   Unhealthy   Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: getsockopt: connection refused
etcd-0               Healthy     {"health": "true"}
etcd-1               Healthy     {"health": "true"}
etcd-2               Healthy     {"health": "true"}

Because the kubernetes-admin user's details and certificate are now embedded in admin.conf, admin.pem and admin-key.pem can be removed from /etc/kubernetes/pki:

cd /etc/kubernetes/pki
rm -f admin.pem admin-key.pem

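3.3 kube-apiserver High Availability

After the steps above, kube-apiserver is running on the Master nodes node1, node2 and node3 at the following addresses:

https://192.168.61.11:6443
https://192.168.61.12:6443
https://192.168.61.13:6443

To achieve high availability, a load balancer can be placed in front to proxy requests to kube-apiserver, and the load balancer itself is then made highly available. One option is HAProxy + Keepalived: run Keepalived and HAProxy on the three Master nodes node1 to node3; the three Keepalived instances compete for the same VIP, and all three HAProxy instances try to bind the same port on that VIP, for example 192.168.61.10:5000. When one HAProxy fails, Keepalived on another node takes over the VIP and the HAProxy on that node takes over the traffic.

Deploying HAProxy + Keepalived is not covered here. The resulting highly available kube-apiserver address is https://192.168.61.10:5000; change the value of clusters:cluster:server in $HOME/.kube/config to this address.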

kubectl get cs
NAME                 STATUS      MESSAGE   ERROR
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused
controller-manager   Unhealthy   Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: getsockopt: connection refused
etcd-0               Healthy     {"health": "true"}
etcd-1               Healthy     {"health": "true"}
etcd-2               Healthy     {"health": "true"}
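For orientation, the HAProxy side of the load balancer described in this section might look roughly like the sketch below. This is not the author's configuration: the proxy names (k8s-api, k8s-masters), the timeouts and the helper write_haproxy_cfg are illustrative assumptions. TCP mode is used so that TLS, including client certificates, passes through to kube-apiserver untouched.

```shell
# Sketch: write a minimal HAProxy TCP front end for the three API servers.
# Pass the target path, for example /etc/haproxy/haproxy.cfg.
write_haproxy_cfg() {
  cat > "$1" <<'EOF'
defaults
    mode tcp
    timeout connect 5s
    timeout client  1h
    timeout server  1h

frontend k8s-api
    bind 192.168.61.10:5000
    default_backend k8s-masters

backend k8s-masters
    balance roundrobin
    server node1 192.168.61.11:6443 check
    server node2 192.168.61.12:6443 check
    server node3 192.168.61.13:6443 check
EOF
}
```

On nodes that do not currently hold the VIP, HAProxy can only bind 192.168.61.10 if the kernel allows non-local binds (net.ipv4.ip_nonlocal_bind = 1), which is worth checking when all three instances are expected to start.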

3.4 Deploying kube-controller-manager

controller-manager.pem and controller-manager-key.pem were created earlier. Now generate the kubeconfig file for kube-controller-manager, controller-manager.conf:

cd /etc/kubernetes
export KUBE_APISERVER="https://192.168.61.10:5000"

# set-cluster
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=controller-manager.conf

# set-credentials
kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/controller-manager.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/pki/controller-manager-key.pem \
  --kubeconfig=controller-manager.conf

# set-context
kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=controller-manager.conf

# set default context
kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=controller-manager.conf

Once controller-manager.conf has been generated, distribute it to /etc/kubernetes on every Master node.

Create the systemd unit file for kube-controller-manager, /usr/lib/systemd/system/kube-controller-manager.service:

export KUBE_APISERVER="https://192.168.61.10:5000"
cat > /usr/lib/systemd/system/kube-controller-manager.service <<EOF
[Unit]
Description=kube-controller-manager
After=network.target
After=kube-apiserver.service

[Service]
EnvironmentFile=-/etc/kubernetes/controller-manager
ExecStart=/usr/local/bin/kube-controller-manager \
  --logtostderr=true \
  --v=0 \
  --master=${KUBE_APISERVER} \
  --kubeconfig=/etc/kubernetes/controller-manager.conf \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/pki/ca-key.pem \
  --root-ca-file=/etc/kubernetes/pki/ca.pem \
  --insecure-experimental-approve-all-kubelet-csrs-for-group=system:bootstrappers \
  --use-service-account-credentials=true \
  --service-cluster-ip-range=10.96.0.0/12 \
  --cluster-cidr=10.244.0.0/16 \
  --allocate-node-cidrs=true \
  --leader-elect=true \
  --controllers=*,bootstrapsigner,tokencleaner
Restart=on-failure
Type=simple
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

- --service-cluster-ip-range must have the same value as the one given to kube-apiserver.

Start the service on every node:

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl start kube-controller-manager
systemctl status kube-controller-manager

After it has been deployed and started on node1, node2 and node3, check the status:

kubectl get cs
NAME                 STATUS      MESSAGE   ERROR
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused
controller-manager   Healthy     ok
etcd-1               Healthy     {"health": "true"}
etcd-2               Healthy     {"health": "true"}
etcd-0               Healthy     {"health": "true"}

At this point kube-controller-manager is deployed on all three Master nodes, and one of them is elected leader and does the work. Check the status on each of the three nodes:

#node1
systemctl status -l kube-controller-manager
......
...... attempting to acquire leader lease...

#node2
systemctl status -l kube-controller-manager
......
...... controllermanager.go:437] Started "endpoint"
...... controllermanager.go:437] Started "replicationcontroller"
...... replication_controller.go:150] Starting RC Manager
...... controllermanager.go:437] Started "replicaset"
...... replica_set.go:155] Starting ReplicaSet controller
...... controllermanager.go:437] Started "disruption"
...... disruption.go:269] Starting disruption controller
...... controllermanager.go:437] Started "statefuleset"
...... stateful_set.go:144] Starting statefulset controller
...... controllermanager.go:437] Started "bootstrapsigner"

#node3
systemctl status -l kube-controller-manager
......
...... attempting to acquire leader lease...

The logs show that the kube-controller-manager on the second node, node2, currently holds the leader lease and is running the controllers, while node1 and node3 are still trying to acquire it.
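Reading logs on three machines works, but the current leader can usually also be read from the API. The sketch below assumes that this release records the lease in a control-plane.alpha.kubernetes.io/leader annotation on an Endpoints object named after the component in kube-system; treat that location as an assumption to verify on your cluster. Both function names are hypothetical.

```shell
# Sketch: extract the holderIdentity value from leader-election annotation text on stdin.
holder_identity() {
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}

# Hypothetical wrapper: print the current leader of a control-plane component,
# e.g. `current_leader kube-controller-manager` or `current_leader kube-scheduler`.
current_leader() {
  kubectl -n kube-system get endpoints "$1" -o yaml | holder_identity
}
```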

3.5 Deploying kube-scheduler

scheduler.pem and scheduler-key.pem were created earlier. Now generate the kubeconfig file for kube-scheduler, scheduler.conf:

cd /etc/kubernetes
export KUBE_APISERVER="https://192.168.61.10:5000"

# set-cluster
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=scheduler.conf

# set-credentials
kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/etc/kubernetes/pki/scheduler.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/pki/scheduler-key.pem \
  --kubeconfig=scheduler.conf

# set-context
kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=scheduler.conf

# set default context
kubectl config use-context system:kube-scheduler@kubernetes --kubeconfig=scheduler.conf

Once scheduler.conf has been generated, distribute it to /etc/kubernetes on every Master node.

Create the systemd unit file for kube-scheduler, /usr/lib/systemd/system/kube-scheduler.service:

export KUBE_APISERVER="https://192.168.61.10:5000"
cat > /usr/lib/systemd/system/kube-scheduler.service <<EOF
[Unit]
Description=kube-scheduler
After=network.target
After=kube-apiserver.service

[Service]
EnvironmentFile=-/etc/kubernetes/scheduler
ExecStart=/usr/local/bin/kube-scheduler \
  --logtostderr=true \
  --v=0 \
  --master=${KUBE_APISERVER} \
  --kubeconfig=/etc/kubernetes/scheduler.conf \
  --leader-elect=true
Restart=on-failure
Type=simple
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Start the service on every node:

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl start kube-scheduler
systemctl status kube-scheduler

At this point kube-scheduler is deployed on all three Master nodes, and one of them is elected leader and does the work.

All core components of the Kubernetes Master cluster should now report a healthy status:

kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-2               Healthy   {"health": "true"}
etcd-0               Healthy   {"health": "true"}
etcd-1               Healthy   {"health": "true"}

4. Deploying the Kubernetes Nodes

A Kubernetes Node runs the following components:

- Docker, which was already installed and started on every node during environment preparation

- kubelet

- kube-proxy

The steps below deploy these components using node1 as the example.

4.1 Installing CNI

wget https://github.com/containernetworking/cni/releases/download/v0.5.2/cni-amd64-v0.5.2.tgz
mkdir -p /opt/cni/bin
tar -zxvf cni-amd64-v0.5.2.tgz -C /opt/cni/bin
ls /opt/cni/bin/
bridge  cnitool  dhcp  flannel  host-local  ipvlan  loopback  macvlan  noop  ptp  tuning

4.2 Deploying kubelet

First make sure the kubelet binary has been copied to /usr/local/bin. Create the kubelet working directory:

mkdir -p /var/lib/kubelet

Install the dependencies:

yum install ebtables socat util-linux conntrack-tools

Next create the certificate and private key used to access the API server, starting with kubelet-csr.json:

{
  "CN": "system:node:node1",
  "hosts": [
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:nodes",
      "OU": "cloudnative"
    }
  ]
}

- CN is the user name, in the form system:node:<node-name>.
- O is the group. Kubernetes RBAC defines a ClusterRoleBinding that ties the group system:nodes to the ClusterRole system:node.

Generate the certificate and private key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=frognew kubelet-csr.json | cfssljson -bare kubelet
ls kubelet*
kubelet.csr  kubelet-csr.json  kubelet-key.pem  kubelet.pem

Generate the kubeconfig file kubelet.conf:

cd /etc/kubernetes
export KUBE_APISERVER="https://192.168.61.10:5000"
# set-cluster
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kubelet.conf
# set-credentials
kubectl config set-credentials system:node:node1 \
  --client-certificate=/etc/kubernetes/pki/kubelet.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/pki/kubelet-key.pem \
  --kubeconfig=kubelet.conf
# set-context
kubectl config set-context system:node:node1@kubernetes \
  --cluster=kubernetes \
  --user=system:node:node1 \
  --kubeconfig=kubelet.conf
# set default context
kubectl config use-context system:node:node1@kubernetes --kubeconfig=kubelet.conf

Create the systemd unit file for kubelet. Replace the value of NodeIP with the address of the node being configured:

export KUBE_APISERVER="https://192.168.61.10:5000"
export NodeIP="192.168.61.11"
cat > /usr/lib/systemd/system/kubelet.service <<EOF
[Unit]
Description=kubelet
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-/etc/kubernetes/kubelet
# To enable kubelet API authentication and authorization, replace
# --authorization-mode=AlwaysAllow below with:
#   --authorization-mode=Webhook --client-ca-file=/etc/kubernetes/pki/ca.pem
ExecStart=/usr/local/bin/kubelet \
  --logtostderr=true \
  --v=0 \
  --address=${NodeIP} \
  --api-servers=${KUBE_APISERVER} \
  --cluster-dns=10.96.0.10 \
  --cluster-domain=cluster.local \
  --kubeconfig=/etc/kubernetes/kubelet.conf \
  --require-kubeconfig=true \
  --pod-manifest-path=/etc/kubernetes/manifests \
  --allow-privileged=true \
  --authorization-mode=AlwaysAllow \
  --network-plugin=cni \
  --cni-conf-dir=/etc/cni/net.d \
  --cni-bin-dir=/opt/cni/bin
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

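- --pod-manifest-path=/etc/kubernetes/manifests sets the directory for static Pod definitions. The directory can be created in advance with mkdir -p /etc/kubernetes/manifests. For more on static Pods, see the static Pod section of the earlier article "Kubernetes Resource Objects: Pod".
- --authorization-mode=AlwaysAllow means the kubelet API authentication and authorization feature introduced in Kubernetes 1.5 is not enabled here; AlwaysAllow is used for now to stay consistent with our production environment.

Start kubelet:

systemctl daemon-reload
systemctl enable kubelet
systemctl start kubelet
systemctl status kubelet

kubectl get nodes
NAME    STATUS     AGE   VERSION
node1   NotReady   35s   v1.6.2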

4.3 Deploying kube-proxy

First make sure the kube-proxy binary has been copied to /usr/local/bin. Create the kube-proxy working directory:

mkdir -p /var/lib/kube-proxy

Next create the certificate and private key used to access the API server, starting with kube-proxy-csr.json:

{
  "CN": "system:kube-proxy",
  "hosts": [
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:kube-proxy",
      "OU": "cloudnative"
    }
  ]
}

- CN sets the User of this certificate to system:kube-proxy. Kubernetes RBAC defines a ClusterRoleBinding that ties the user system:kube-proxy to the role system:node-proxier, which carries the permissions kube-proxy needs on the API server.

Generate the certificate and private key:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=frognew kube-proxy-csr.json | cfssljson -bare kube-proxy
ls kube-proxy*
kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem

Generate the kubeconfig file kube-proxy.conf:

cd /etc/kubernetes
export KUBE_APISERVER="https://192.168.61.10:5000"
# set-cluster
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.conf
# set-credentials
kubectl config set-credentials system:kube-proxy \
  --client-certificate=/etc/kubernetes/pki/kube-proxy.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/pki/kube-proxy-key.pem \
  --kubeconfig=kube-proxy.conf
# set-context
kubectl config set-context system:kube-proxy@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-proxy \
  --kubeconfig=kube-proxy.conf
# set default context
kubectl config use-context system:kube-proxy@kubernetes --kubeconfig=kube-proxy.conf

Create the systemd unit file for kube-proxy. Replace the value of NodeIP with the address of the node being configured:

export KUBE_APISERVER="https://192.168.61.10:5000"
export NodeIP="192.168.61.11"
cat > /usr/lib/systemd/system/kube-proxy.service <<EOF
[Unit]
Description=kube-proxy
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
EnvironmentFile=-/etc/kubernetes/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --logtostderr=true \
  --v=0 \
  --bind-address=${NodeIP} \
  --kubeconfig=/etc/kubernetes/kube-proxy.conf \
  --cluster-cidr=10.244.0.0/16

Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

Start kube-proxy:

systemctl daemon-reload
systemctl enable kube-proxy
systemctl start kube-proxy
systemctl status -l kube-proxy

4.4 Deploying the Pod Network Add-on flannel

flannel runs in the Kubernetes cluster as a DaemonSet. Because our etcd cluster has TLS authentication enabled, the etcd TLS certificates are first stored in a Kubernetes Secret so that the flannel containers can reach etcd.

kubectl create secret generic etcd-tls-secret --from-file=/etc/etcd/ssl/etcd.pem --from-file=/etc/etcd/ssl/etcd-key.pem --from-file=/etc/etcd/ssl/ca.pem -n kube-system

kubectl describe secret etcd-tls-secret -n kube-system
Name:         etcd-tls-secret
Namespace:    kube-system
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
etcd-key.pem:  1675 bytes
etcd.pem:      1489 bytes
ca.pem:        1330 bytes

Download the flannel manifests:

mkdir -p ~/k8s/flannel
cd ~/k8s/flannel
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel-rbac.yml
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Make the following changes to kube-flannel.yml:

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  ......
spec:
  template:
    metadata:
      ......
    spec:
      ......
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.7.1-amd64
        command: [
          "/opt/bin/flanneld",
          "--ip-masq",
          "--kube-subnet-mgr",
          "--etcd-endpoints=https://192.168.61.11:2379,https://192.168.61.12:2379,https://192.168.61.13:2379",
          "--etcd-cafile=/etc/etcd/ssl/ca.pem",
          "--etcd-certfile=/etc/etcd/ssl/etcd.pem",
          "--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem",
          "--iface=eth0" ]
        securityContext:
          privileged: true
        ......
        volumeMounts:
        ......
        - name: etcd-tls-secret
          readOnly: true
          mountPath: /etc/etcd/ssl/
      ......
      volumes:
      ......
      - name: etcd-tls-secret
        secret:
          secretName: etcd-tls-secret

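The following flags are added to the flanneld command line:

- --etcd-endpoints sets the addresses of the etcd cluster.
- --etcd-cafile sets the etcd CA certificate; /etc/etcd/ssl/ca.pem is mounted from the Secret etcd-tls-secret.
- --etcd-certfile sets the etcd public certificate; /etc/etcd/ssl/etcd.pem is mounted from the Secret etcd-tls-secret.
- --etcd-keyfile sets the etcd private key; /etc/etcd/ssl/etcd-key.pem is mounted from the Secret etcd-tls-secret.
- --iface names the network interface to use when a Node has more than one.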

Now deploy flannel:

kubectl create -f kube-flannel-rbac.yml
kubectl create -f kube-flannel.yml

kubectl get pods --all-namespaces
NAMESPACE     NAME                    READY   STATUS    RESTARTS   AGE
kube-system   kube-flannel-ds-dhz6m   2/2     Running   0          10s

ifconfig flannel.1
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 10.244.0.0  netmask 255.255.255.255  broadcast 0.0.0.0
        ether c2:e6:ad:26:f5:f6  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 1 overruns 0  carrier 0  collisions 0

4.5 Deploying the kube-dns Add-on

Kubernetes supports running kube-dns as a cluster add-on: a DNS Pod and Service are scheduled inside the cluster.

mkdir -p ~/k8s/kube-dns
cd ~/k8s/kube-dns
wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/kubedns-cm.yaml
wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/kubedns-sa.yaml
wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/kubedns-svc.yaml.base
wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/kubedns-controller.yaml.base
wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/transforms2sed.sed

Look at transforms2sed.sed:

s/__PILLAR__DNS__SERVER__/$DNS_SERVER_IP/g
s/__PILLAR__DNS__DOMAIN__/$DNS_DOMAIN/g
s/__MACHINE_GENERATED_WARNING__/Warning: This is a file generated from the base underscore template file: __SOURCE_FILENAME__/g

In this file, replace $DNS_SERVER_IP with 10.96.0.10 and $DNS_DOMAIN with cluster.local. (including the trailing dot).

Note that $DNS_SERVER_IP must match the --cluster-dns flag set for kubelet.
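As an alternative to hand-editing transforms2sed.sed, the two placeholders can be substituted directly. The sketch below uses a hypothetical helper, render_kubedns, that takes the DNS service IP and the cluster domain as arguments; it only handles the two __PILLAR__ placeholders shown above.

```shell
# Sketch: fill the kube-dns template placeholders in text read from stdin.
# $1 is the DNS service IP, $2 is the cluster domain.
render_kubedns() {
  sed -e "s/__PILLAR__DNS__SERVER__/$1/g" \
      -e "s/__PILLAR__DNS__DOMAIN__/$2/g"
}

# Example:
#   render_kubedns 10.96.0.10 cluster.local. < kubedns-svc.yaml.base > kubedns-svc.yaml
#   render_kubedns 10.96.0.10 cluster.local. < kubedns-controller.yaml.base > kubedns-controller.yaml
```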

Run:

cd ~/k8s/kube-dns
sed -f transforms2sed.sed kubedns-svc.yaml.base > kubedns-svc.yaml
sed -f transforms2sed.sed kubedns-controller.yaml.base > kubedns-controller.yaml

Then create the resources:

kubectl create -f kubedns-cm.yaml
kubectl create -f kubedns-sa.yaml
kubectl create -f kubedns-svc.yaml
kubectl create -f kubedns-controller.yaml

Check the kube-dns Pod and confirm that all Pods are in the Running state:

kubectl get pods --all-namespaces
NAMESPACE     NAME                       READY   STATUS    RESTARTS   AGE
kube-system   kube-dns-806549836-rv4mm   3/3     Running   0          12s
kube-system   kube-flannel-ds-jnpg9      2/2     Running   0          5m

Test that DNS works:

kubectl run curl --image=radial/busyboxplus:curl -i --tty

nslookup kubernetes.default
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

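kube-dns is one of the key components behind service discovery in Kubernetes. By default only one DNS Pod is created, so in production kube-dns may need to be scaled out. There are two ways to do this:

- Scale manually: kubectl --namespace=kube-system scale deployment kube-dns --replicas=<NUM_YOU_WANT>
- Use the DNS Horizontal Autoscaler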

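4.6 Adding New Nodes

Repeat steps 4.1 to 4.3 to add a new Node. Keep the following in mind:

- In 4.2, when generating the certificate and private key that kubelet uses to access the API server, and the kubeconfig file based on them, the CN user name is system:node:<node-name>, which is different for every Node.
- In 4.3, there is no need to generate a new certificate and private key for kube-proxy; simply distribute the kubeconfig file kube-proxy.conf from an existing Node to the new one.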
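Since only the CN changes from node to node, the per-node CSR config can be generated from a template instead of edited by hand. A minimal sketch, with kubelet_csr_json as a hypothetical helper that reuses the field values from kubelet-csr.json in section 4.2:

```shell
# Sketch: print a kubelet CSR config for the node name given as $1.
kubelet_csr_json() {
  cat <<EOF
{
  "CN": "system:node:$1",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:nodes",
      "OU": "cloudnative"
    }
  ]
}
EOF
}

# Example: write one CSR file per new node, then sign each one with the
# cfssl gencert command from section 4.2.
#   for node in node2 node3; do
#     kubelet_csr_json "$node" > "kubelet-$node-csr.json"
#   done
```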

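kubectl get nodes
NAME    STATUS   AGE   VERSION
node1   Ready    2h    v1.6.2
node2   Ready    12m   v1.6.2
node3   Ready    10m   v1.6.2

Note that in production the Master nodes should be isolated, and running workloads on them is not recommended. Because this test environment has only a few machines, node1 to node3 also serve as the Nodes here.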

5. Deploying the Dashboard Add-on

mkdir -p ~/k8s/dashboard
cd ~/k8s/dashboard
wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/kubernetes-dashboard.yaml
kubectl create -f kubernetes-dashboard.yaml

kubectl get pod,svc -n kube-system -l app=kubernetes-dashboard
NAME                                       READY   STATUS    RESTARTS   AGE
po/kubernetes-dashboard-2457468166-5q9xk   1/1     Running   0          6m

NAME                       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
svc/kubernetes-dashboard   10.102.242.110   <nodes>       80:31632/TCP   6m

6. Deploying the Heapster Add-on

Next, install Heapster to add usage statistics and monitoring to the cluster and to give the Dashboard its charts. InfluxDB is used as the Heapster storage backend:

mkdir -p ~/k8s/heapster
cd ~/k8s/heapster
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/grafana.yaml
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml
wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

kubectl create -f ./

Finally, confirm that all Pods are in the Running state and open the Dashboard; the cluster usage statistics are shown there as charts.

7. Deploying a Sample Application

As a test, deploy a microservice-based application:

kubectl create namespace sock-shop
kubectl apply -n sock-shop -f "https://github.com/microservices-demo/microservices-demo/blob/master/deploy/kubernetes/complete-demo.yaml?raw=true"

The application pulls quite a few images, so be patient:

kubectl get pod -n sock-shop -o wide
NAME                            READY   STATUS    RESTARTS   AGE   IP            NODE
carts-153328538-8r4pv           1/1     Running   0          12m   10.244.0.10   node1
carts-db-4256839670-cw9pr       1/1     Running   0          12m   10.244.0.9    node1
catalogue-114596073-z2079       1/1     Running   0          12m   10.244.2.5    node3
catalogue-db-1956862931-ld598   1/1     Running   0          12m   10.244.1.6    node2
front-end-3570328172-vfj7r      1/1     Running   0          12m   10.244.0.11   node1
orders-2365168879-h3zwr         1/1     Running   0          12m   10.244.1.7    node2
orders-db-836712666-954nd       1/1     Running   0          12m   10.244.2.6    node3
payment-1968871107-9511t        1/1     Running   0          12m   10.244.0.12   node1
queue-master-2798459664-h99nt   1/1     Running   0          12m   10.244.1.8    node2
rabbitmq-3429198581-2hvzl       1/1     Running   0          12m   10.244.2.7    node3
shipping-2899287913-kxt4k       1/1     Running   0          12m   10.244.0.13   node1
user-468431046-6tf0w            1/1     Running   0          11m   10.244.1.9    node2
user-db-1166754267-qrqnf        1/1     Running   0          11m   10.244.2.8    node3

kubectl -n sock-shop get svc front-end
NAME        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
front-end   10.103.64.184   <nodes>       80:30001/TCP   13m

All done O(∩_∩)O~ Open http://<NodeIP>:30001 in a browser and have a look around the application.